The Effective Altruism movement is a philosophy and social movement that applies evidence and reason to determine the most effective ways to benefit others. In recent years, organizations like GiveWell and the Bill & Melinda Gates Foundation have helped to popularize the core concepts of Effective Altruism.

They support the idea that charity should be done with a strictly analytical mindset. Under the assumption that all living creatures have some level of sentience, Effective Altruism tries to minimize the sum of all conscious suffering in the long run. Pretty straightforward.

This problem usually reduces to some basic number crunching on the ways in which people suffer and the cost necessary to mitigate that suffering. For example, it costs about $40,000 to train a seeing eye dog to help a blind person live their life. It also costs about $100 to fund a simple surgery which would prevent somebody from going blind. It should be self-evident that resources are limited and that all people’s suffering should be weighted equally. So choosing to spend limited resources on a seeing eye dog is considered immoral because it would come at the cost of ~400 people not getting eye surgery and losing their vision.
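The comparison above is a single division. A minimal sketch, using only the two rough dollar figures from the paragraph (the function and constant names are made up for illustration):

```python
# Rough figures quoted in the text; neither is a precise cost estimate.
GUIDE_DOG_COST_USD = 40_000  # training one seeing eye dog
SURGERY_COST_USD = 100       # one sight-saving surgery


def surgeries_forgone(budget_usd: int, surgery_cost_usd: int) -> int:
    """Number of whole surgeries the same budget could have funded."""
    return budget_usd // surgery_cost_usd


print(surgeries_forgone(GUIDE_DOG_COST_USD, SURGERY_COST_USD))  # 400
```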

This sort of utilitarian thought is fairly intuitive. To help quantify reduction of suffering across a diverse set of unique actions, health economists and bioethicists defined the Quality-Adjusted Life Year (QALY), a unit measuring longevity, discounted for disease. A perfectly healthy infant may expect to have 80 QALYs ahead of them, but if that child were born blind, they may have, say, 60 QALYs ahead of them (in this made-up example, blindness causes life to be 75% as pleasant as a perfectly healthy life).
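The made-up blindness example works out like this. This is a sketch that treats a QALY as years of life multiplied by a quality weight between 0 and 1; the helper name `qalys` is hypothetical, and the 0.75 weight is the illustrative figure from the paragraph, not a measured value.

```python
def qalys(years: float, quality_weight: float) -> float:
    """Years of life scaled by a quality weight (1.0 = perfect health)."""
    if not 0.0 <= quality_weight <= 1.0:
        raise ValueError("quality_weight must be between 0 and 1")
    return years * quality_weight


healthy = qalys(80, 1.0)   # perfectly healthy infant
blind = qalys(80, 0.75)    # same lifespan at 75% quality
print(healthy, blind, healthy - blind)  # 80.0 60.0 20.0
```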

Traditional charity tends to be locally focused: you’d deliver meals for elderly people in your town or support a soup kitchen for the homeless. Considering Effective Altruism principles, however, you’d probably come to the conclusion that you can almost always save more QALYs from disease by funding health interventions in impoverished African or Asian areas. In general, the more analytical you are in your giving, the more you choose to spend on this sort of giving opportunity.

As philosophers become more and more rigorous in their approach to Effective Altruism, you’d expect them to continue tending towards provably high-impact spending opportunities. But many moral philosophers actually argue that we should instead focus our attention on mitigating existential risks: dangers that could potentially end human civilization (think doomsday asteroid collisions, adversarial AI, bioweapons, etc.).

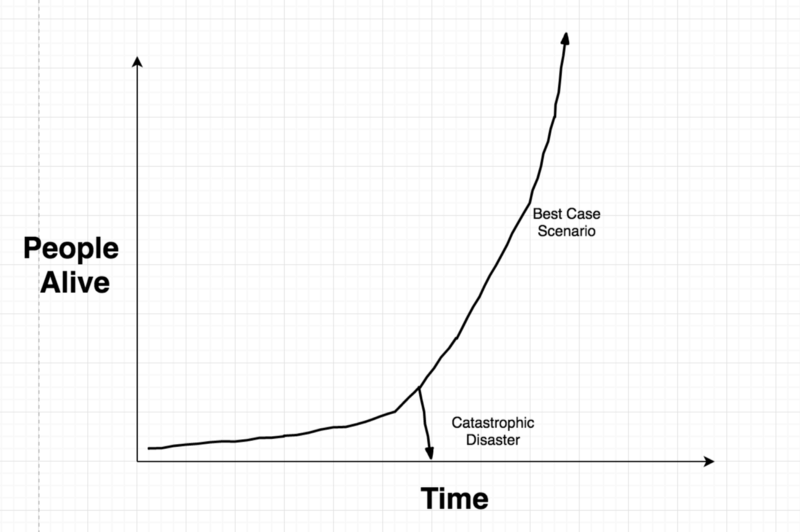

Here’s the basic argument: when trying to maximize the sum of positive sentient experiences in the long run, we need to consider what “long run” could actually mean. There are two cases: (1) humanity reaches a level of technological advancement that removes scarcity, eradicates most diseases, and allows us to colonize other planets and solar systems over the course of millions or billions of years, or (2) humanity becomes extinct due to some sort of catastrophic failure or slow resource depletion.

In the first case, humans would live for millions/billions of years across thousands of planets, presumably with an excellent quality of life because of the advanced technology allowing this expansion. Call this 10²³ QALYs (1bn years * 1k planets * 1bn people per planet * 100 years of life per person). Of course this scenario is unlikely—a lot of things need to go right in the next several thousand years for this to happen. But no matter how small the odds, it’s clear that the potential for positive sentient experience is unfathomably large.

It’s worth noting that in the second case, the upper limit on how long humanity survives before extinction is likely on the scale of thousands of years. Philosophers argue that beyond that point we’ll have colonized other planets, which significantly decreases the risk of any given disaster affecting the entire human race. So our second case future-QALY estimate is about 10¹⁶ (10bn human lives * 10k years before extinction * 100 years per life).

Given these rough estimates, we can do some quick arithmetic to find the probability threshold that would make it worthwhile to spend money mitigating existential risk: 10¹⁶ / 10²³ = 0.0000001. So if the chance of some catastrophic disaster is more than one in ten million, it’s more cost-effective to mitigate that risk than to support the lives of people currently suffering.
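To check the orders of magnitude, here is a small sketch that multiplies out the factors exactly as the two estimates state them. The variable names are made up for illustration. Both estimates include the same factor of 100 years per life, so it cancels in the ratio.

```python
from fractions import Fraction

# Case 1: humanity survives and expands.
# years * planets * people per planet * years of life per person
case_1_qalys = 10**9 * 10**3 * 10**9 * 10**2

# Case 2: humanity goes extinct.
# human lives * years before extinction * years per life
case_2_qalys = 10**10 * 10**4 * 10**2

# Exact ratio of the two estimates, i.e. the probability threshold.
threshold = Fraction(case_2_qalys, case_1_qalys)

print(case_1_qalys == 10**23, case_2_qalys == 10**16)  # True True
print(threshold)  # 1/10000000
```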

So how do the best-estimate numbers actually work out? The Oxford Future of Humanity Institute guessed there’s a 19% chance of extinction before 2100. This is a totally non-scientific analysis of the issue, but interesting nonetheless. The risks of non-anthropogenic (not human-caused) extinction events are a little easier to quantify: based on the historical record of asteroid collisions and observed near-misses, we can expect collisions that cause mass (not necessarily total) extinction to happen once every ~50 million years.
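For a sense of scale, the "once every ~50 million years" figure can be turned into a chance per century. The sketch below assumes impacts arrive at a constant average rate (a Poisson process). That is a simplifying assumption of this example, not something the estimate itself claims, and the function name is hypothetical.

```python
import math

# From the text: roughly one mass-extinction-causing collision per ~50 million years.
MEAN_YEARS_BETWEEN_IMPACTS = 50_000_000


def impact_probability(window_years: float,
                       mean_interval_years: float = MEAN_YEARS_BETWEEN_IMPACTS) -> float:
    """P(at least one impact within the window), assuming a constant-rate Poisson process."""
    # 1 - exp(-t / T), computed with expm1 to stay accurate for tiny probabilities.
    return -math.expm1(-window_years / mean_interval_years)


print(impact_probability(100))
```

Under that assumption, the chance over any given 100 years comes out at roughly two in a million.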

A compelling argument supporting a non-negligible chance of extinction is the Fermi Paradox. If intelligent life developed somewhere else in the galaxy, it would only take a few million years to travel across the entire galaxy and colonize each livable solar system. That’s not much time on cosmic and evolutionary scales, so where are the aliens? Either we’re the first life form to civilize, or all the others died out. Many astronomers studying this topic think the latter case is more likely and we have no reason to say we’re any different.

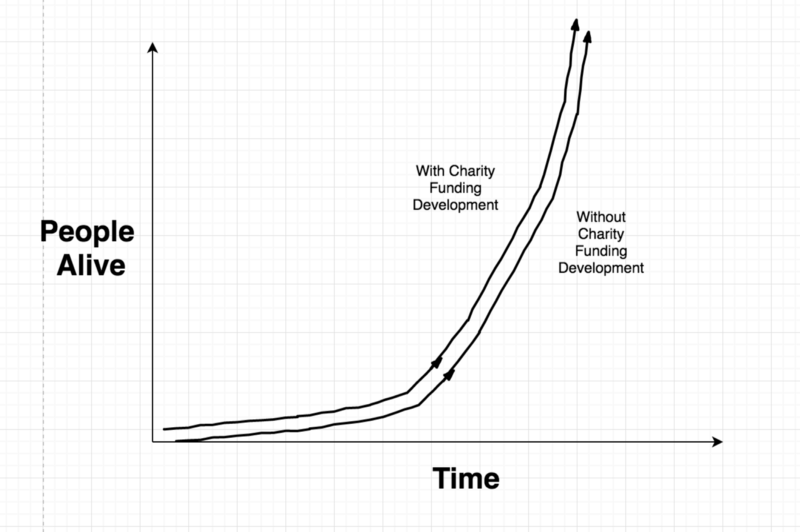

Regardless, there’s an uncomfortable amount of uncertainty surrounding the likelihood of existential global catastrophes. Although the philosophical and mathematical underpinnings of this idea are well understood, nobody knows how to pick the right numbers. Since it’s so hard to imagine what the right probabilities are, it can be argued that we should hedge against the worst-case downside. Traditional charity focuses on eliminating poverty and health problems, which only accelerates the course of human development. This choice can be visualized:

These pictures are good at explaining the magnitude of risk involved and the sentiment of those who argue for funding existential risk research over traditional charity.

So how should you choose to effectively allocate your resources to do good? That’s still a tough question. I’d highly recommend reading *The Most Good You Can Do*. Most folks involved in the Effective Altruism movement (myself included) would suggest supporting GiveWell or The Centre for Effective Altruism. But if you find the idea explained in this essay compelling enough, consider the Centre for the Study of Existential Risk.

Thoughts? Tweet me at @whrobbins or find my email at willrobbins.org!